Can deep learning algorithms be applied to the metaverse? Virtual environments have long served as a training ground for deep learning models whose real-world data is hard to collect, such as those used for self-driving cars. Could we use some of the same architectures to distinguish objects in the metaverse? To test this hypothesis, I turned to a game I remembered fondly from my early years: the massively multiplayer online game RuneScape.

RuneScape hasn't changed much since I played it 15 years ago. The 3D graphics are basic. Players perform tasks and kill monsters to increase their skills and purchase items from the in-game economy.

RuneScape was also the first game I really wrote code for. As a 13-year-old, I programmed bots to play the game for me: collecting items, performing tasks, and generating in-game gold. Eventually I started to write more sophisticated programs that used Java reflection and code injection. But after about a year, my bot farms were banned and I quit the game and moved on to different problems.

Data Collection

There are already ways to identify objects in the game, but they require code injection and Java reflection, both of which the game's anti-cheating software can detect easily. I chose to use some of those methods to collect the data. I collected about a hundred thousand labeled in-game screenshots with a bot programmed to walk through all of the in-game areas, varying the camera pitch and yaw and taking a screenshot every few seconds.

There was a bit of extra work to downscale the images and run some quality checks for object occlusion, but otherwise this part was pretty straightforward. The labeled dataset included about 800 different in-game objects: NPCs, interactive game objects, and decorative game objects.
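To make the labeling step concrete, here is a minimal sketch of turning one pixel-space bounding box into a YOLO-style label line (`class x_center y_center width height`, all normalized to the image size). The function name, the `min_visible` threshold, and the visibility test are my assumptions for illustration: the check below only measures how much of a box falls inside the frame, which is a stand-in for the occlusion check rather than a description of how it was actually done.

```python
from typing import Optional, Tuple


def to_yolo_label(
    class_id: int,
    box: Tuple[float, float, float, float],  # x1, y1, x2, y2 in pixels
    image_size: Tuple[int, int],             # width, height in pixels
    min_visible: float = 0.5,                # hypothetical quality threshold
) -> Optional[str]:
    """Return a YOLO label line, or None if too little of the box is on screen."""
    x1, y1, x2, y2 = box
    width, height = image_size

    # Clip the box to the image bounds.
    cx1, cy1 = max(0.0, x1), max(0.0, y1)
    cx2, cy2 = min(float(width), x2), min(float(height), y2)

    full_area = (x2 - x1) * (y2 - y1)
    visible_area = max(0.0, cx2 - cx1) * max(0.0, cy2 - cy1)
    if full_area <= 0 or visible_area / full_area < min_visible:
        return None  # mostly off screen: skip this label

    # Normalize center and size by the image dimensions.
    x_center = (cx1 + cx2) / 2 / width
    y_center = (cy1 + cy2) / 2 / height
    box_w = (cx2 - cx1) / width
    box_h = (cy2 - cy1) / height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"
```

Because the coordinates are normalized, downscaling a screenshot without changing its aspect ratio leaves the label lines unchanged.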

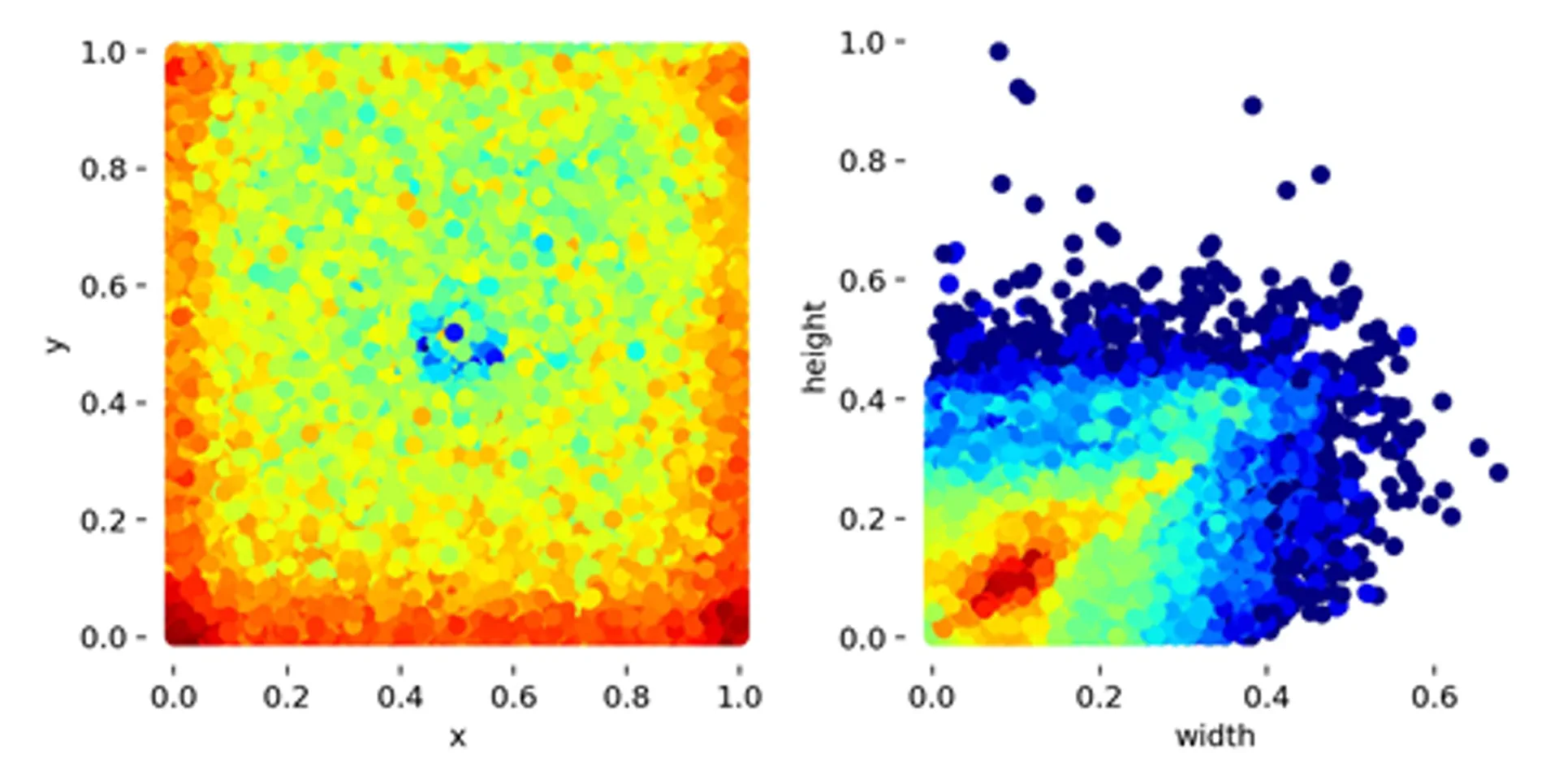

Dataset statistics

The Model

When evaluating architectures, I had two goals: (1) to achieve 60 fps inference on my personal hardware (64 GB RAM, NVIDIA Titan X GPU) and (2) to optimize for mean average precision (mAP) across the object classes. I settled on YOLOv5, with a few modifications to tailor it to the game's 3D environment.
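A 60 fps target leaves roughly 16.7 ms per frame for everything the model does. The sketch below shows one way to check a candidate against that budget. The helper names and the `warmup` default are assumptions for illustration, and `infer` stands for whatever callable wraps the model.

```python
import time
from statistics import mean
from typing import Any, Callable, Dict, Iterable


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds."""
    return 1000.0 / target_fps


def benchmark(
    infer: Callable[[Any], Any],
    frames: Iterable[Any],
    target_fps: float = 60.0,
    warmup: int = 3,  # early frames are skipped; first calls are often slower
) -> Dict[str, Any]:
    """Time infer() on each frame and compare the mean to the frame budget."""
    latencies_ms = []
    for index, frame in enumerate(frames):
        start = time.perf_counter()
        # Note: for GPU models, infer() should block until the result is
        # ready, otherwise this measures only the time to queue the work.
        infer(frame)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        if index >= warmup:
            latencies_ms.append(elapsed_ms)
    if not latencies_ms:
        raise ValueError("need more frames than warmup iterations")

    mean_ms = mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "worst_ms": max(latencies_ms),
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
        "meets_budget": mean_ms <= frame_budget_ms(target_fps),
    }
```

The worst-case latency is reported alongside the mean because a live stream stutters on slow frames even when the average looks fine.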

I trained three separate models to get a baseline: first with a small subset of classes, second with a specific subset of classes, and finally with all 800+ classes. I experimented with different image sizes, batch sizes, and training from scratch vs. starting from pretrained weights.
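An experiment sweep like this can be laid out as a grid of YOLOv5 `train.py` invocations. The sketch below only builds the command lines. The dataset file name, the choice of the `yolov5s` variant, and the specific sizes are placeholders I picked for illustration, not the values used here.

```python
from itertools import product
from typing import List, Sequence


def build_train_commands(
    data_yaml: str,
    image_sizes: Sequence[int],
    batch_sizes: Sequence[int],
    epochs: int = 100,
) -> List[List[str]]:
    """Build one YOLOv5 train.py command per hyperparameter combination."""
    weight_options = [
        # Start from pretrained weights (assumed variant: yolov5s).
        ["--weights", "yolov5s.pt"],
        # Train from scratch: empty weights plus a model config.
        ["--weights", "", "--cfg", "yolov5s.yaml"],
    ]
    commands = []
    for img, batch, weights in product(image_sizes, batch_sizes, weight_options):
        commands.append([
            "python", "train.py",
            "--img", str(img),
            "--batch-size", str(batch),
            "--epochs", str(epochs),
            "--data", data_yaml,
            *weights,
        ])
    return commands


if __name__ == "__main__":
    # "runescape.yaml" is a hypothetical dataset config name.
    for command in build_train_commands("runescape.yaml", [416, 640], [16, 32]):
        print(" ".join(arg if arg else "''" for arg in command))
```

Each command is kept as an argument list so it can be passed to `subprocess.run` from inside a YOLOv5 checkout without shell quoting issues.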

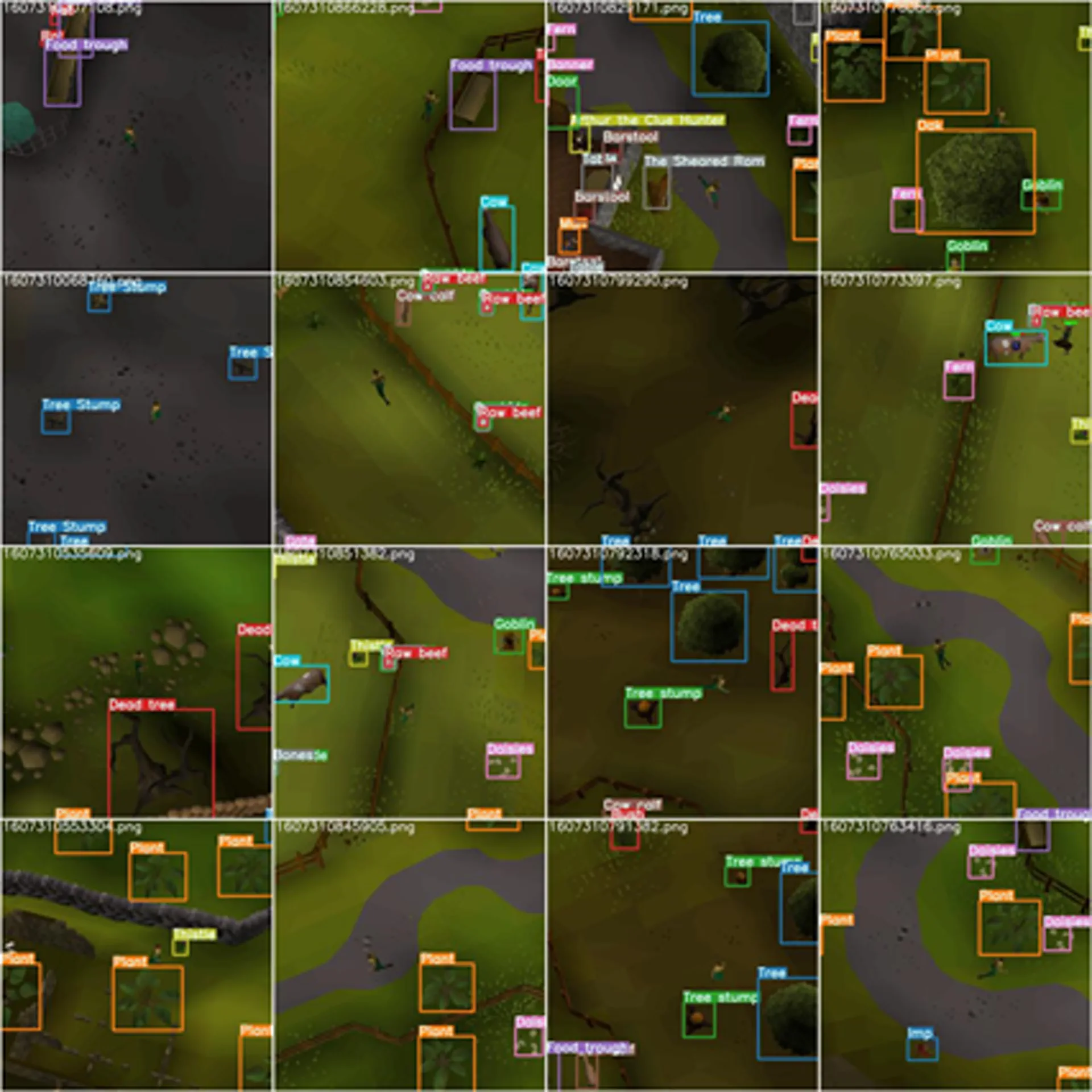

A typical batch

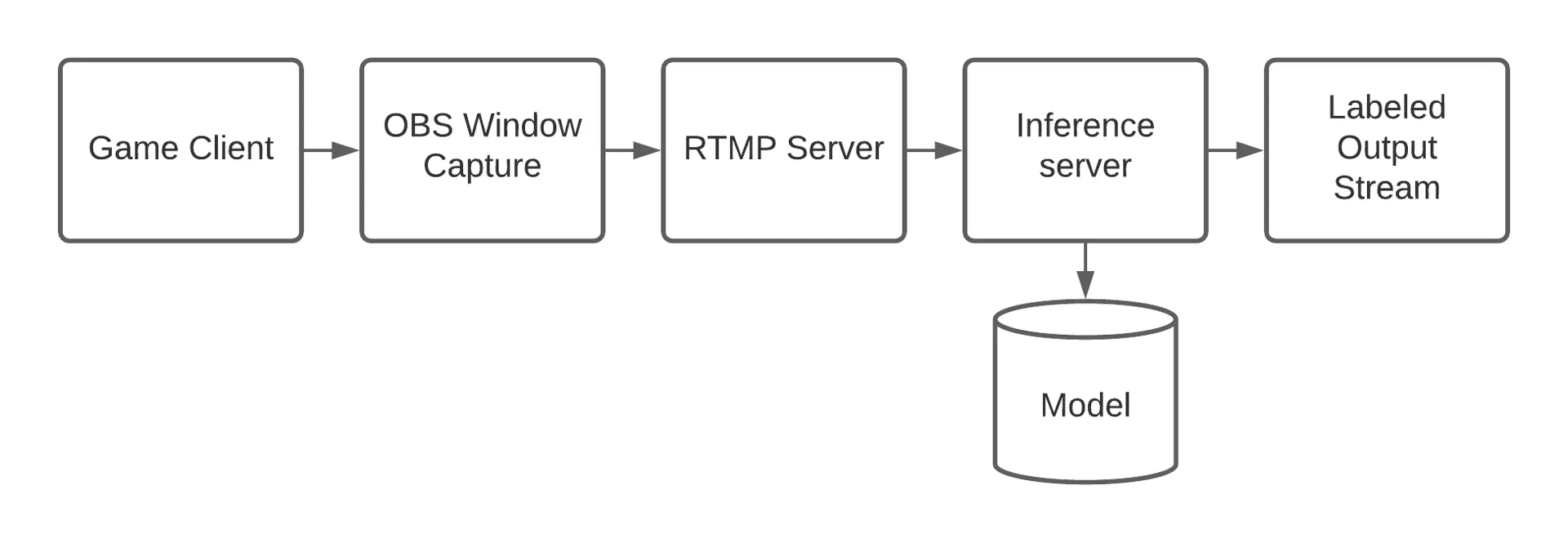

Online Inference Pipeline

Once the model was trained, I needed to figure out how to run online inference. I ended up using OBS to capture the game window and stream it to a local nginx server over RTMP. The inference server could then connect to that stream, run predictions, draw bounding boxes, and output a labeled stream.
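The inference side of that pipeline can be sketched roughly as follows, assuming OpenCV is installed with an FFmpeg backend that can open RTMP URLs. The function names and the detection tuple layout are hypothetical, and `predict` stands for whatever wraps the trained model. Re-encoding the annotated frames into an output stream is not shown.

```python
from typing import Any, Callable, Iterable, Iterator, List, Tuple

# Assumed detection layout: pixel corners plus a class name.
Detection = Tuple[int, int, int, int, str]  # x1, y1, x2, y2, label


def read_frames(stream_url: str) -> Iterator[Any]:
    """Yield frames from a video stream until it ends or drops."""
    import cv2  # imported lazily so the rest of the module works without it

    capture = cv2.VideoCapture(stream_url)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            yield frame
    finally:
        capture.release()


def draw_boxes(frame: Any, detections: List[Detection]) -> Any:
    """Draw each detection's rectangle and class name onto the frame."""
    import cv2

    green = (0, 255, 0)
    for x1, y1, x2, y2, label in detections:
        cv2.rectangle(frame, (x1, y1), (x2, y2), green, 2)
        cv2.putText(frame, label, (x1, max(y1 - 5, 0)),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, green, 1)
    return frame


def label_stream(
    frames: Iterable[Any],
    predict: Callable[[Any], List[Detection]],
    draw: Callable[[Any, List[Detection]], Any] = draw_boxes,
) -> Iterator[Any]:
    """Run predict on every frame and yield the annotated result."""
    for frame in frames:
        yield draw(frame, predict(frame))
```

A call might look like `label_stream(read_frames("rtmp://localhost/live/runescape"), predict)`, where the URL is a placeholder for whatever application and stream key the nginx server exposes.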

Conclusion

The model seemed to work fairly well. Some classes had extremely high mAP, while a few low-scoring classes might indicate data quality or labeling issues. It was a fun project to work on, and it suggests that these types of algorithms can generalize well to massively multiplayer online games.

You can find some of the code on GitHub here.