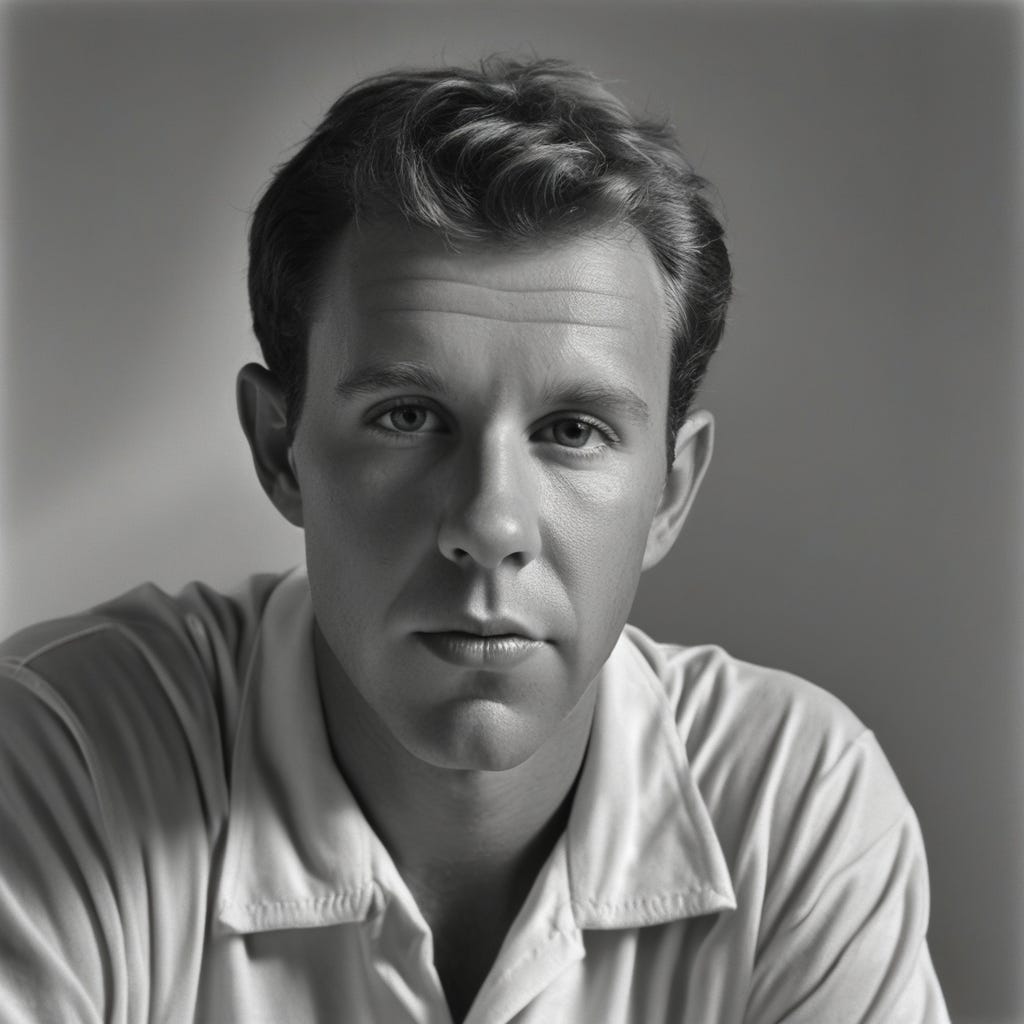

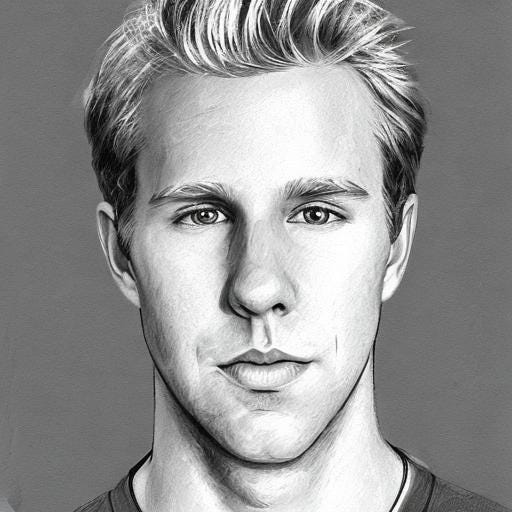

When the first Stable Diffusion models came out, I fine-tuned one with the DreamBooth algorithm to add myself as a new concept. It only took about 5-10 images of myself. The results were pretty good (learning a new token, “mattrickard”, on Stable Diffusion v1.5).

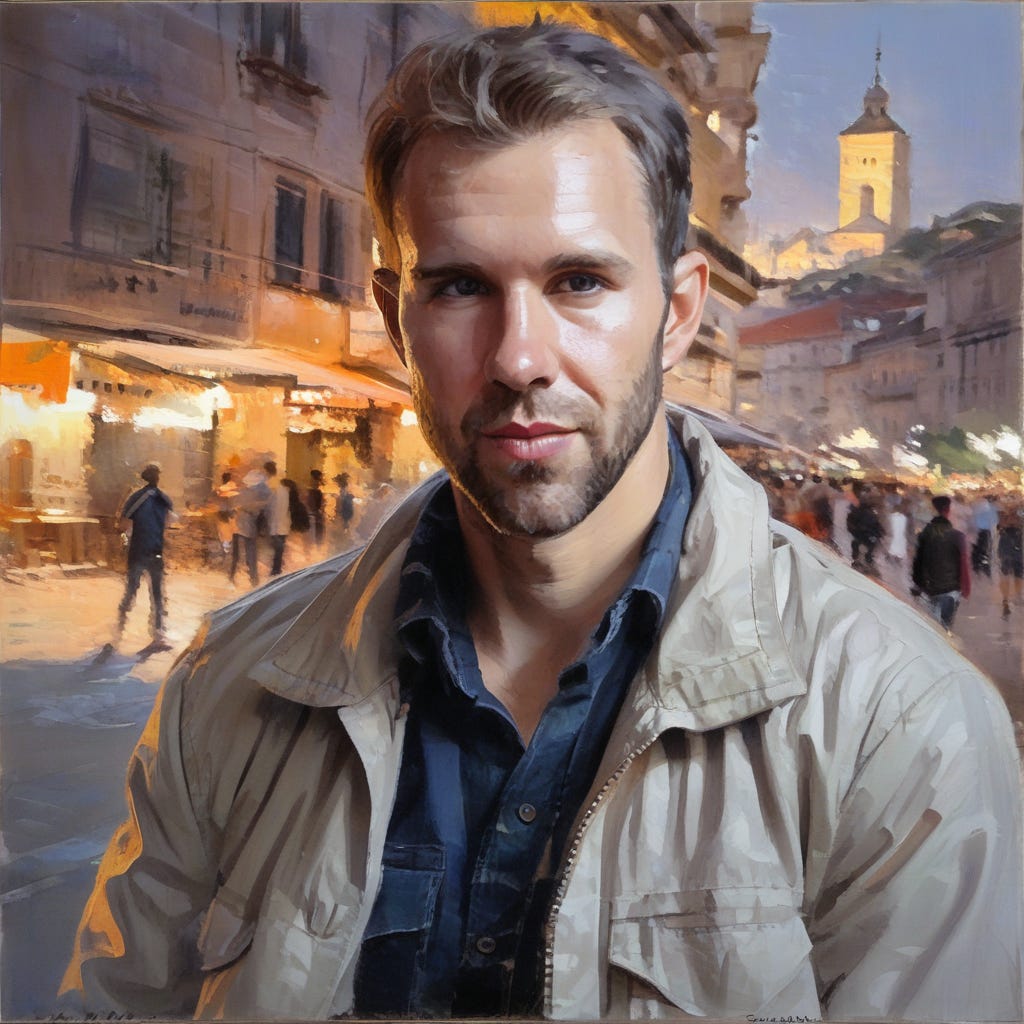

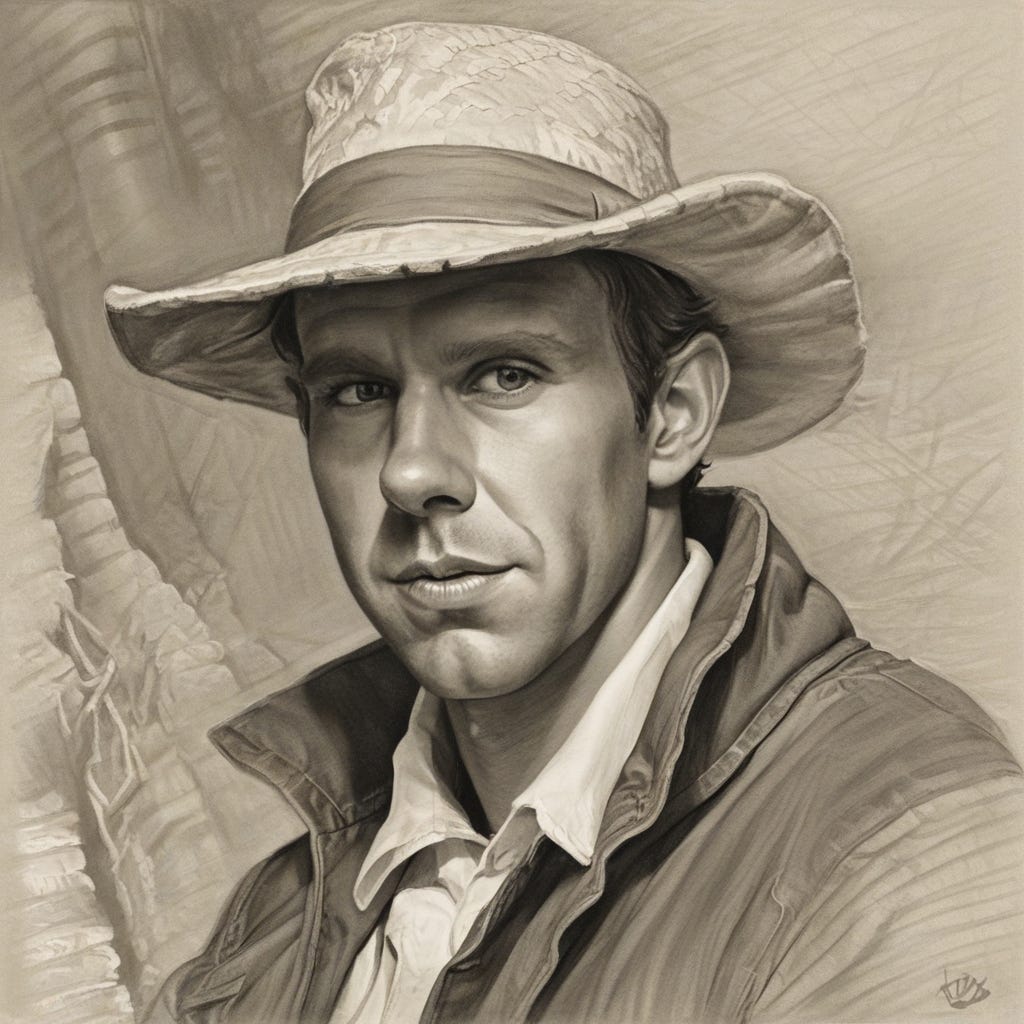

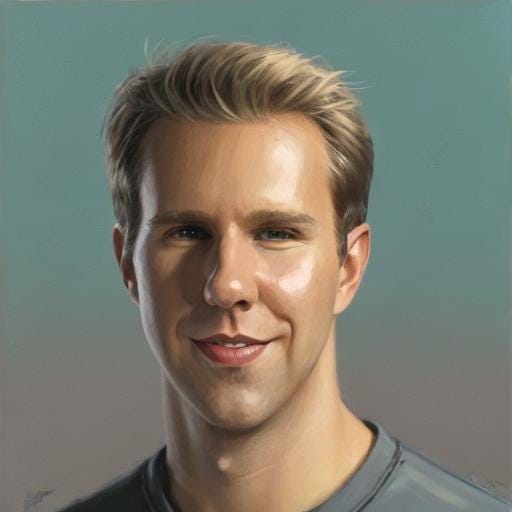

This time, I upgraded the model (SDXL) and the method (LoRA). Instead of a fully fine-tuned model, I was left with a relatively small file (about 20 MB) of model weight deltas. I ended up applying LoRA to DreamBooth. There are two methods I haven’t tried yet: textual inversion and pivotal tuning. The former adds a new token embedding and learns it via gradient descent. Pivotal tuning combines textual inversion (training a new token) with DreamBooth LoRA (training a concept).
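To make the "weight deltas" idea concrete, here is a minimal sketch of how LoRA represents the change to one weight matrix as the product of two small matrices. It is an illustration in plain Python, not the training code I ran: the helper names (`matmul`, `lora_delta`, `param_counts`), the layer sizes, the rank, and `alpha` are all assumptions chosen for the example.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_delta(B, A, alpha, rank):
    """Return the low-rank weight delta (alpha / rank) * B @ A."""
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(B, A)]


def param_counts(d_out, d_in, rank):
    """Parameters in a full d_out x d_in matrix vs. its two LoRA factors."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


# Hypothetical toy sizes, far smaller than any real SDXL layer.
d_out, d_in, rank, alpha = 8, 6, 2, 2.0
rng = random.Random(0)

# Frozen base weight: never updated during LoRA training.
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# Trainable factors: A gets a small random init, B starts at zero,
# so the delta is zero before any training step.
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
B = [[0.0] * rank for _ in range(d_out)]

delta = lora_delta(B, A, alpha, rank)
W_adapted = [[w + d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

# Illustrative (assumed) layer size and rank, to show the storage saving.
full, lora = param_counts(1280, 1280, 16)
print(f"full matrix: {full} params, LoRA factors: {lora} params")
```

Only `A` and `B` need to be saved, which is why the resulting file is so much smaller than a fully fine-tuned checkpoint. For the assumed 1280 x 1280 layer at rank 16, the two factors hold 40x fewer parameters than the full matrix.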

You can see how the models have improved in just a few months.